There Is No AI Security, There's Just Security (Deal With It)

Why Getting the Fundamentals Right Matters More Than Ever

Here's a reality check for my fellow security leaders: while your inbox is flooded with vendors promising to secure your AI initiatives, most organizations still struggle with basic security hygiene. We're living in a world where security teams are being asked to prevent their machine learning models from leaking sensitive data while they still don't have complete visibility into where that sensitive data lives. Let that sink in.

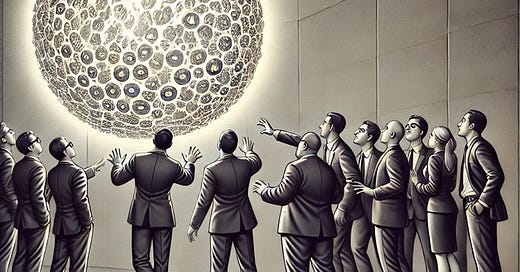

The security industry loves a shiny new problem to solve. Right now, AI security is that shiny object. Your board is asking about it, vendors are rushing to market with solutions, and every security conference has a track dedicated to it. But here's the uncomfortable truth: if you can't enforce basic security requirements or don't have visibility into your environment, no amount of AI-specific security controls will save you.

Look, I get it. AI security is sexy. It's cutting edge. Prompt injection, model poisoning, output manipulation – these are fascinating challenges that deserve attention. But they're advanced problems that sit at the top of a pyramid that needs a solid foundation. And from what I've seen across the industry, that foundation is often built on sand.

This isn't about dismissing AI security concerns; they're real and they matter. It's about understanding that AI security isn't a separate domain that exists in isolation. It's an extension of your existing security program, and it's only as strong as your weakest link.

You want to protect your AI systems? Great. But unless you've got the boring stuff figured out – access controls, network segmentation, data governance, the fundamentals that make security pros' eyes glaze over – you're just adding complexity to an already fragile ecosystem.

This isn't a hot take; it's a cold, hard reality check. And over the next few minutes, I'm going to break down why focusing on AI security without solid security fundamentals is like trying to build a penthouse on a foundation of toothpicks.

The Sexy vs. The Necessary: Stop Chasing Shiny Objects

Let's talk about what's getting all the attention in security right now. The headlines are full of AI model jailbreaking, training data extraction, and inference manipulation. These are legitimate concerns, don't get me wrong. But obsessing over them while the basics are broken is like installing a bank vault door on a tent.

Here's what's actually keeping security leaders up at night (or should be):

Data governance that's more "wild west" than "well-managed"

And that's not just a metaphor. 54% of organizations admit they've lost control of their data across cloud and on-prem environments, and 57% can't even properly track their data supply chain.

Access controls that are about as precise as a shotgun blast

83% of cloud security breaches are tied to access-related vulnerabilities.

Privilege management that's still stuck in the "set it and forget it" era

80% of cyberattacks use identity-based attack methods.

Logging and monitoring that's like trying to drink from a fire hose – lots of data, minimal insight

A 2021 IDC study revealed that companies with 500-1,499 employees ignore or fail to investigate 27% of all alerts. This percentage rises to 30% for larger companies with 1,500 or more employees.

Incident response plans that haven't been updated since before ChatGPT was a twinkle in OpenAI's eye

As of 2024, it takes companies an average of 194 days to identify a data breach and 64 days to contain it.

The security industry is caught up in an AI frenzy right now. Every conference talk, every roadmap, every security strategy has an AI security component. But here's the reality: if you can't tell me who has access to your training data, what's in it, or where it came from, your fancy AI security controls are like putting a state-of-the-art lock on a cardboard box.

Let's get specific about what matters. Before you even think about AI-specific security controls, ask yourself:

Can you accurately inventory all your data assets?

Do you have real-time visibility into who's accessing what?

Is your security architecture documented and understood?

Can you detect and respond to basic security incidents efficiently?

If you answered "no" or "kind of" to any of these, congratulations – you've just identified where your security focus should actually be. Because here's the truth that nobody wants to hear: your security posture isn't determined by your most sophisticated AI security control. It's determined by your weakest link, which is usually hiding in plain sight in your security fundamentals.

The unsexy truth is that most AI security incidents won't be due to some sophisticated adversarial attack. They'll be because someone left an API key in a public repository, or because your data pipeline is leaking like a sieve, or because you never got around to implementing proper access reviews.
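To make the "API key in a public repository" failure concrete, here's a minimal sketch of the kind of check that catches it before it ships. It assumes just two illustrative rules: the well-known `AKIA` prefix format of AWS access key IDs, and a generic `api_key`/`secret`/`token` assignment with a quoted value. Dedicated scanners such as gitleaks or truffleHog ship far larger rule sets; the function names here are hypothetical.

```python
import re
from pathlib import Path

# Illustrative rules only (assumption), not a complete secret-detection policy.
SECRET_PATTERNS = {
    # AWS access key IDs: "AKIA" followed by 16 uppercase letters or digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # api_key / api-key / secret / token assigned a quoted literal of 8+ chars.
    "generic_assignment": re.compile(
        r"""(?i)\b(api[_-]?key|secret|token)\b\s*[:=]\s*['"][^'"]{8,}['"]"""
    ),
}


def scan_text(text: str, source: str = "<string>") -> list[tuple[str, int, str]]:
    """Return (source, line_number, rule_name) for every suspected secret."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((source, lineno, rule))
    return findings


def scan_tree(root: str) -> list[tuple[str, int, str]]:
    """Scan every UTF-8 text file under `root`; unreadable or binary files are skipped."""
    findings = []
    for path in Path(root).rglob("*"):
        if not path.is_file():
            continue
        try:
            text = path.read_text(encoding="utf-8")
        except (UnicodeDecodeError, OSError):
            continue
        findings.extend(scan_text(text, str(path)))
    return findings
```

Wired into a pre-commit hook or CI step that fails whenever `scan_tree(".")` returns anything, this turns "someone left a key in the repo" from an incident into a failed build.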

It's time to prioritize the essentials. The fundamentals won't make headlines, and your board probably won't get excited about them. But they're the difference between building security on bedrock versus quicksand.

The House of Cards: When AI Security Meets Reality

Let's get real about what happens when organizations pile AI security controls on top of shaky foundations. It's not just theoretical – it's a disaster waiting to happen.

Picture this: A security team implements sophisticated AI model controls to prevent data leakage through their new large language model. Impressive, right? Except they're feeding this model with data from poorly secured S3 buckets, through unmonitored data pipelines, managed by overprovisioned service accounts.

This isn't hypothetical fear-mongering. The pattern repeats across the industry:

Organizations implementing AI security guardrails while their secrets management is still "it's in a config file somewhere"

Teams worrying about model output sanitization while their data classification is best described as "we think we know what's sensitive"

Companies investing in AI security monitoring while their basic security monitoring still generates more noise than signal

Here's what should keep you up at night: the same fundamental security gaps that have plagued us for decades aren't going away – they're getting amplified. When you add AI systems to an environment with poor security hygiene, you're not just adding new risks; you're creating a complexity multiplier for your existing vulnerabilities.

The worst part? Most organizations know this. They know their foundations are weak. But instead of fixing the basics, they're slapping on AI security controls like strips of duct tape on a leaking dam. It's not just ineffective – it's dangerous. It creates a false sense of security that could make the eventual breach even more devastating.

The reality is that, more often than not, AI security isn't failing because the controls aren't sophisticated enough. It's failing because it's built on top of security programs that still haven't mastered the fundamentals. And no amount of AI security magic can compensate for missing the basics.

Building from the Ground Up: Back to Basics (Yes, Really)

Let's talk about what actually works. Not what's trending on LinkedIn, not what's in the latest Gartner hype cycle, but what genuinely makes your organization more secure when implementing AI.

Start here:

Data Governance

Know what data you have

Know where it lives

Know who has access to it

Know how it flows through your systems
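Those four "know" questions map directly onto a data model. Here's a minimal sketch, assuming a hypothetical inventory where each asset records what it is, where it lives, who can read it, and where it flows next. With that in place, "who has access to your training data, what's in it, and where did it come from?" becomes a graph walk instead of a meeting. The `DataAsset` fields, the classification levels, and the example bucket names are all illustrative assumptions.

```python
from collections import deque
from dataclasses import dataclass, field

# Illustrative sensitivity ordering (assumption: substitute your own scheme).
LEVELS = {"public": 0, "internal": 1, "confidential": 2, "restricted": 3}


@dataclass
class DataAsset:
    """One entry in a hypothetical data inventory."""
    name: str                # what the data is
    location: str            # where it lives (bucket, table, share, ...)
    classification: str      # how sensitive it is, a key of LEVELS
    readers: set[str] = field(default_factory=set)       # who can access it
    feeds_into: list[str] = field(default_factory=list)  # where it flows next


def index(assets: list[DataAsset]) -> dict[str, DataAsset]:
    return {asset.name: asset for asset in assets}


def upstream_sources(inventory: dict[str, DataAsset], target: str) -> set[str]:
    """Every asset whose data can reach `target` via the recorded flows."""
    # Reverse the flow edges once, then walk them breadth-first.
    parents: dict[str, list[str]] = {}
    for asset in inventory.values():
        for child in asset.feeds_into:
            parents.setdefault(child, []).append(asset.name)
    seen: set[str] = set()
    queue = deque([target])
    while queue:
        for parent in parents.get(queue.popleft(), []):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen


def training_data_report(inventory: dict[str, DataAsset], target: str) -> dict:
    """What feeds `target`, how sensitive is it, and who can read any of it?"""
    names = upstream_sources(inventory, target) | {target}
    # KeyError here means the target itself was never inventoried.
    assets = [inventory[name] for name in names]
    return {
        "sources": sorted(names - {target}),
        # KeyError here means an asset carries a label outside LEVELS.
        "highest_classification": max(
            (a.classification for a in assets), key=LEVELS.__getitem__
        ),
        "readers": sorted(set().union(*(a.readers for a in assets))),
    }
```

For example, if a CRM export and web logs both feed a feature table that feeds the training set, the report surfaces the CRM export's "confidential" label and its readers, even though nobody ever granted them access to the training set directly.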

Infrastructure Hygiene

Get visibility into your resource configurations

Actually remediate configuration drift (not just detect it)

Move to immutable infrastructure (remember that hype? It's still a good idea)

If you can't go immutable, at least patch your systems consistently

This isn't revolutionary – it's table stakes. Yet it's astonishing how many organizations dive into AI implementations without these basics in place. Your AI system is only as secure as the infrastructure it runs on and the data that feeds it.
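"Actually remediate configuration drift (not just detect it)" is easy to say, so here's what the difference looks like in a minimal sketch. It assumes desired and actual configuration are available as flat key/value maps, and that `apply` is a hypothetical hook into whatever actually changes your infrastructure; the setting names in any usage are illustrative.

```python
from typing import Any, Callable

Config = dict[str, Any]


def detect_drift(desired: Config, actual: Config) -> dict[str, tuple[Any, Any]]:
    """Map each drifted setting to (desired_value, actual_value).

    A setting missing from `actual` is reported with actual_value None."""
    return {
        key: (want, actual.get(key))
        for key, want in desired.items()
        if actual.get(key) != want
    }


def remediate(
    desired: Config, actual: Config, apply: Callable[[str, Any], None]
) -> list[str]:
    """Push every drifted setting back to its desired value, not just report it.

    `apply` is a hypothetical hook: in practice it might call your cloud
    provider's API or trigger an infrastructure-as-code run."""
    drift = detect_drift(desired, actual)
    for key, (want, _current) in drift.items():
        apply(key, want)
    return sorted(drift)
```

Most tools stop at `detect_drift` and file a ticket. The point of `remediate` is that the loop closes automatically. On immutable infrastructure, `apply` would redeploy from a known-good image instead of editing a running resource in place.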

Next layer:

Identity and Access Management

Implement proper authentication and authorization

Regular access reviews (yes, actually do them)

Principle of least privilege (for real this time)

Service account governance
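Access reviews and least privilege stop being aspirational once you can run them as a query. Here's a minimal sketch, assuming you can export grants with a last-used timestamp (most identity providers and cloud IAM services expose some form of this, but the `Grant` shape below is hypothetical). It flags permissions idle longer than an assumed 90-day window and service accounts with no accountable owner.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass(frozen=True)
class Grant:
    """One permission held by one principal (hypothetical export format)."""
    principal: str                  # user or service account
    permission: str                 # what the grant allows
    last_used: Optional[datetime]   # None means never used
    is_service_account: bool = False
    owner: Optional[str] = None     # accountable human, for service accounts


def review(
    grants: list[Grant], now: datetime, max_idle_days: int = 90
) -> list[tuple[str, str, str]]:
    """Flag grants that violate least privilege or lack ownership."""
    cutoff = now - timedelta(days=max_idle_days)
    findings = []
    for g in grants:
        # A permission nobody has used within the window is a removal candidate.
        if g.last_used is None or g.last_used < cutoff:
            findings.append((g.principal, g.permission, "unused: candidate for removal"))
        # Service accounts need a named owner who answers for them in the review.
        if g.is_service_account and not g.owner:
            findings.append((g.principal, g.permission, "service account without an owner"))
    return findings
```

The 90-day threshold is a starting assumption, not a standard; the useful part is running this on a schedule and actually removing what it flags.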

Once you have these foundational elements in place, then – and only then – should you start thinking about AI-specific controls. Here's how they build on each other:

Traditional Security → AI Security

Data classification → Training data validation

Access controls → Model access governance

Monitoring → Model behavior monitoring

Incident response → AI incident playbooks

Configuration management → Model deployment security

Notice something? Every AI security control depends on a traditional security fundamental. Skip the foundation, and everything above it becomes meaningless.
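Take the first row, data classification to training data validation. Here's a minimal sketch of a pre-training gate, assuming labels come from your existing classification program and an illustrative policy that only "public" and "internal" data may be used for training. Notice that the AI control is almost trivial; all the real work lives in the labels it reads. The policy set, exception, and function names are hypothetical.

```python
from typing import Mapping, Optional

# Assumption: an illustrative policy; substitute your own classification scheme.
ALLOWED_FOR_TRAINING = frozenset({"public", "internal"})


class TrainingDataRejected(Exception):
    """Raised when a dataset cannot be cleared for model training."""


def validate_training_inputs(classifications: Mapping[str, Optional[str]]) -> list[str]:
    """Return the dataset names cleared for training, or raise if any input is
    unclassified or more sensitive than the policy allows.

    `classifications` maps dataset name -> label from your existing data
    classification program (None means nobody ever classified it)."""
    unclassified = sorted(n for n, label in classifications.items() if label is None)
    too_sensitive = sorted(
        n for n, label in classifications.items()
        if label is not None and label not in ALLOWED_FOR_TRAINING
    )
    if unclassified or too_sensitive:
        raise TrainingDataRejected(
            f"unclassified={unclassified} too_sensitive={too_sensitive}"
        )
    return sorted(classifications)
```

If most of your datasets would come back `None`, the gate can't help you, and that's exactly the point of this section.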

For those ready to do this right, here's your priority list:

Fix your data governance

Get your infrastructure basics right

Implement proper access controls

Get your monitoring basics right

Document your security architecture

THEN look at AI-specific controls

Yes, this is boring. Yes, this is basic. Yes, this is what you should have been doing anyway. But it's also what will actually make your AI implementations secure.

The Way Forward (Spoiler: It's Not More AI)

Let's wrap this up with some truth that might be hard to swallow: AI security isn't going to save us from ourselves. No amount of advanced security controls will compensate for poor security fundamentals. In fact, implementing AI security controls on top of weak foundations isn't just ineffective – it's actively dangerous because it creates an illusion of security.

Here's what security leaders need to do:

First, stop chasing the next big thing for a minute. Yes, AI security is important. Yes, you need to think about it. But not at the expense of your foundations. The next time someone asks you about AI security controls, start by asking about your current security posture.

Second, be honest about where you are. If you're still struggling with basics like data governance, access management, or configuration control, admit it. There's no shame in having gaps – the shame is in ignoring them while chasing shiny objects.

Finally, embrace the boring. The fundamentals aren't exciting, but they're what make or break your security program. You want to be on the cutting edge of AI security? Great. Get your basics right first. Your boring, solid security program will outperform a flashy, unstable one every time.

The future of security isn't about choosing between traditional controls and AI security – it's about building AI security on top of rock-solid fundamentals. Everything else is just security theater.

And remember: There is no AI security. There's just security. Deal with it.

Sources:

StreamSets survey on data governance challenges

IDC and Ermetic report on cloud security breaches

Precisely’s 2024 Data Integrity Trends report

Fortra’s report on incident response planning for 2025

IDC’s U.S. Critical Start MSS MDR Performance Survey, May 2021

Statista, “Time to identify and contain data breaches global 2024”, July 2024

CrowdStrike’s 2023 Global Threat Report